In this post, we provide a proof of Kirchhoff’s Matrix Tree theorem [1] which is quite beautiful in our biased opinion. This is a 160-year-old theorem which connects several fundamental concepts of matrix analysis and graph theory (e.g., eigenvalues, determinants, cofactor/minor, Laplacian matrix, incidence matrix, connectedness, graphs, and trees etc.). There are already many expository articles/proof sketches/proofs of this theorem (for example, see [4-6, 8,9]). Here we compile yet-another-expository-article and present arguments in a way which should be accessible to the beginners.

Statement

Suppose is the Laplacian of a connected simple graph

with

vertices. Then the number of spanning trees in

, denoted by

, is the following:

the product of all non-zero eigenvalues of

.

Before we proceed to the proof, let us give some definitions. We assume that some elementary concepts of linear algebra (e.g., rank, determinant, eigenvalue/eigenvector, minor/cofactor, etc.) are known to the reader. However, we use some known “facts” that we do not prove in this article.

The proof has two main parts:

Part 1: We prove that the number of spanning trees in a connected simple graph is equal to any cofactor of the Laplacian matrix of that graph. This is the heart of the theorem/proof.

Part 2: Next we use standard linear algebra results to relate the cofactor of any matrix and its eigenvalues. Since the matrix we use is the graph Laplacian, it readily relates the eigenvalues (of the Laplacian) with the number of spanning trees (using the result from previous part). This part can be found in any standard text in matrix analysis; for example: see Theorem 1.2.12, and Definitions 1.2.5 and 1.2.9 in the Horn and Johnson book [7].

Now we mention some preliminaries.

Preliminaries

(For concepts that are considered basics in linear algebra, we provide hyperlinks to detailed exposition.)

Let be a graph on

vertices and

edges.

is the

adjacency matrix of

with zeros in the main diagonal and the entry

whenever the edge

exists, and

when the edge

does not exist.

Let be the

diagonal matrix whose

entry is the degree of the vertex

. The combinatorial Laplacian, or simply Laplacian, is the

matrix

. Note that sum of all entries in any row of

is

(why?); that is why the constant vector

is an eigenvector of

with eigenvalue

. Since all eigenvalues of

are non-negative,

must be the smallest eigenvalue of

.

Let be the

incidence matrix where columns correspond to edges and rows correspond to vertices, and any column

, corresponding to the edge

), contains zeros at all rows except at two positions:

and

. Note that

can be written as the “square” of the incidence matrix; that is,

.

Let be the cofactor of the

diagonal element of a square matrix. Let

be the

principal minor of any square matrix

.

Now let us mention some facts of which we will not present proofs.

Some Facts from Matrix Analysis

Fact F1. Let be the

submatrix obtained from the

incidence matrix

by removing its

row. Then, the cofactor of the

diagonal element of the Laplacian

is the following:

Proof: See the “Omitted Proofs” section.

Fact F2. Cauchy-Binet formula for determinant, which can compute the determinant of the product of two matrices, say , from the determinants of the submatrices of

and

.

Fact F3. (Unused)

Fact F4. The product of all non-zero eigenvalues of the combinatorial Laplacian is equal to the sum of all principal minors of

. That is,

where is the

principal minor of

.

Proof: See the “Omitted Proofs” section below.

F5. Diagonal cofactors of an square matrix are equal to corresponding principal minors. That is,

.

Proof(of F5): Same as the proof sketch of F4.

Now we will establish some relationship between the incidence matrix and the connectedness of

.

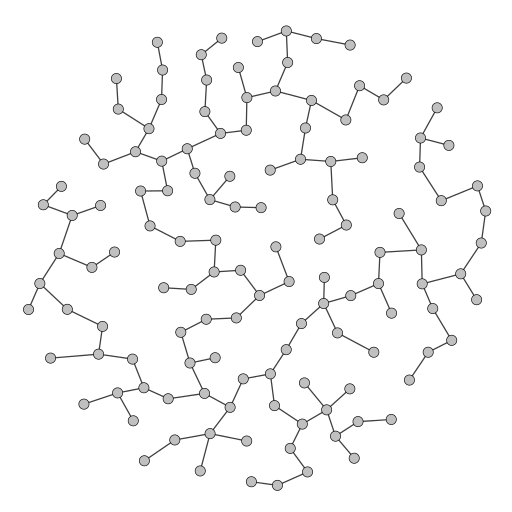

Rank of submatrices of the Incidence Matrix

Note that each column of the incidence matrix has exactly one and one

. Let us define the row sum of a column of any matrix as the sum of all entries in that column. Similarly, let the row sum of a matrix be the sum of all its entries. Now note that if

is connected, each column of the incidence matrix

contains exactly two non-zero entries

and

, and thus row sum at each column is zero. Now, this implies that the sum of all row vectors of

is the zero-vector and hence any row can be expressed as a linear combination of all other rows. Therefore, rows of

are linearly dependent, which means the rank of

must be smaller than

. Thus we have the following claim:

Proposition P1. If is connected and

is its incidence matrix, then

.

Now consider a subgraph of

on

vertices such that

. This means, all rows of

are linearly independent, and thus there is at least one column in

for which the row-sum is not zero. This implies that there is at least one edge

which connects a vertex in

to a vertex outside

. In other words,

cannot be a connected component of

. Therefore, we have the following claim:

Proposition P2. If the rank of the incidence matrix is

for a

-vertex subgraph

of

, then

is connected.

Now we will prove an important fact about the determinant of any square submatrix of

.

Lemma L0. The determinant of any square submatrix of

is either 0 or

.

Proof: See the “Omitted Proofs” section.

Rank of  and Connectedness of

and Connectedness of

Let be a non-empty submatrix obtained from

by removing exactly one row from

. Note that at least one column of

will have non-zero row sum. Therefore, rows of

will be linearly independent, and

must be greater than or equal to the number of rows of

. Since

has

rows, it follows that

.

However, the rank of the incidence matrix must be greater than or equal to the rank of any row-subset of

, such as

. Since the largest row-subset of

will have

rows, we can have the following claim:

Proposition P3. If is connected and

is its incidence matrix, then

.

Now we will prove the following lemma.

Lemma L1. (Rank of the Incidence matrix of a connected graph) Let be the incidence matrix of graph

. Then,

is connected

.

Proof: See the “Omitted Proofs” section below.

The Connection between Spanning Trees and the Incidence Matrix

The subgraph will be a spanning tree of the graph

if it has the following properties:

has

vertices and

edges.

is connected.

- The incidence matrix

, whose columns correspond to the

edges of

, has

rows.

Let us consider , which is a

matrix. Since

is connected, there will be no row with all zero entries, and according to Lemma L1 we know that

. This means any collection of

rows of

are linearly independent. Consequently, any row-subset

of

containing

rows (and all

columns) will have rank

. Now, since

is a square matrix with

rows and

columns with rank

, it follows that

. Therefore we have proved the following lemma:

Lemma L2. (Determinant of an order- square submatrix of

)

If is the incidence matrix of a spanning tree

of

, every

square submatrix of

is non-singular.

The above Lemma also leads us to the following elegant result.

Lemma L3. (Spanning tree of and incidence matrix

) Let

be a graph with

vertices, and let

be its incidence matrix. Let

be a subgraph of

with

edges, and let

be its incidence matrix. Then,

is a spanning tree of

every

square submatrix of

is non-singular.

Proof: See the “Omitted Proofs” section below.

We can readily get the following corollary.

Corollary C1.

Each non-singular square submatrix of

corresponds to a spanning tree of

induced by its columns.

Proof: See the “Omitted Proofs” section.

The above corollary leads us to the following simple yet beautiful result.

Lemma L4. (Number of spanning tree and the incidence matrix )

Let be a submatrix of

derived by removing the

row from

. Thus

has

rows and

columns. Then, the number of spanning trees in

, denoted by

, is equal to the number of non-singular

submatrices of

.

Proof: See the “Omitted Proofs” section below.

Let us call the Reduced Incidence Matrix, derived by removing the

row from

. Thus the question of computing

, the number of spanning trees in

, is equivalent to computing the number of non-singular

square submatrices of the reduced incidence matrix

.

Now we can proceed to the proof of the main theorem.

Proof of the Main Theorem

First, let us briefly state what we are going to prove. Let be the

eigenvalues (counting multiplicities) of the combinatorial Laplacian

of the graph

. We want to prove that

.

We will proceed as follows.

Step 1: (Submatrix from

) Let

be an

submatrix of the incidence matrix

by deleting the

row of

. Using Fact F1, we see that

.

Note that has

rows and

columns. We want

Step 2: (Square submatrices of of order

) Now we will build all

submatrices from

as follows. Let

be any ordered sequence of

integers picked from

such that

. Let

be the set of all such sequences

. Now, given a particular sequence

, let

be the

submatrix of

obtained by keeping only those columns from

whose index are in

.

Step 3: (Apply Fact F2) We want to evaluate the determinant from Step 1 using Cauchy-Binet formula, as follows:

Step 4: (Apply Lemma L0) must be either

or

, and hence the above equation becomes the following:

Step 5: (Apply Lemma L4) By Lemma L4, the number of spanning trees in is the same as the number of non-singular

square submatrices of

. Therefore, the above equation becomes

.

Step 6: Note that the value of does not depend on

, and thus each diagonal cofactor of the combinatorial Laplacian

is equal to

.

.

Step 7: (Use Facts F4, F5) Restating Fact F4, we have,

Thus, we have proved Kirchoff’s Matrix-Tree theorem.

Omitted Proofs

Fact F1. Let be the

submatrix obtained from the

incidence matrix

by removing its

row. Then, the cofactor of the

diagonal element of the Laplacian

is the following:

Proof sketch (of F1): First note that for unweighted graphs, and hence, every

entry of

comes from the dot product of the

row and

column of

. Now, the

matrix

will not have any contribution from the

row and

column of

. This is exactly what happens when computing

: First we take the

principal minor by removing the

row/column from

and then take determinant. (The sign of

will be

, which is positive.)

Fact F4. The product of all non-zero eigenvalues of the combinatorial Laplacian is equal to the sum of all principal minors of

. That is,

where is the

principal minor of

.

Proof sketch (of F4): Since eigenvalues are solutions to the characterstic polynomial of a square matrix

, they can be related to the coefficients of

by using elementary symmetric polynomials. Next, the coefficients of

can be expressed as the sum of principal minors of

. Details can be found in any standard matrix analysis textbook, e.g., Chapter 0 of [7].

Lemma L0. The determinant of any square submatrix of

is either 0 or

.

The following proof is taken from the Masters Thesis of Boomen [6].

Proof: The proof is by induction.

(Base case) First observe that only two nonzero entries appear at each column of , and one of them is

and the other is

. Now consider any

submatrices of

where each entry is either 0 or

and the nonzero entries in a column are different. It can be checked that the value of the determinant of this submatrix can only be one of

. This forms the basis of our induction.

(Induction hypothesis) Assume that the determinant of every square submatrix of of order smaller than

is either 0 or

.

(Induction) Suppose be a

square submatrix of

. We want to show that the

.

Think about Laplace expansion of the determinant of . There can be only three cases:

- Case 1: If there is any row/column with all zeros,

and we are done.

- Case 2: If any row/column of

contains exactly one nonzero entry (

), we expand

along that row/column (which will have only one cofactor) and by applying the inductive hypothesis, the result is

which is either 0 or

, and we are done.

- Case 3: If all columns of

contain exactly two nonzero entries (one 1 and the other -1), then if we add all rows the sum will be the zero vector. Hence

and therefore

, and we are done.

Lemma L1. (Rank of the Incidence matrix of a connected graph) Let be the incidence matrix of graph

. Then,

is connected

.

Proof:

(The part.) From Propositions 1 and 3, we see that if

is connected, then

, which means

.

(The part.) It given that

. Therefore, there exists a

submatrix of

, call it

, whose rank is

. Suppose

is the incidence matrix of the subgraph

of

on

vertices. Since

, we can apply Proposition 4 and hence

is connected.

Lemma L3. (Spanning tree of and incidence matrix

) Let

be a graph with

vertices, and let

be its incidence matrix. Let

be a subgraph of

with

edges, and let

be its incidence matrix. Then,

is a spanning tree of

every

square submatrix of

is non-singular.

Proof:

(The part.) Apply Lemma L2.

(The part.) If every

submatrix

of the

matrix

is non-singular, it implies that

. Therefore, applying Proposition 2 we can see that

is connected. Since

has exactly

edges on

vertices,

must be a tree. Hence,

is a spanning tree.

Corollary C1.

Each non-singular square submatrix of

corresponds to a spanning tree of

induced by its columns.

Proof:

Let be a connected graph. Let

be a non-singular

square submatrix of

and let

be the subgraph for which

is the incidence matrix. Assume, without loss of generality, that

. Since

(because it is non-singular), at least one of its columns will have non-zero row-sum and thus one of its edges must be incident to the vertex

such that

. Thus the subgraph

is connected, has

vertices and

edges, and thus it is a spanning tree of

and has the same edges that correspond to the columns of

.

Lemma L4. (Number of spanning tree and the incidence matrix )

Let be a submatrix of

derived by removing the

row from

. Thus

has

rows and

columns. Then, the number of spanning trees in

, denoted by

, is equal to the number of non-singular

submatrices of

.

Proof:

By Corollary C1 each non-singular submatrix

of

corresponds to a spanning tree. However, since

has

rows, each

has a different set of edges, and thus corresponds to a different spanning tree. Therefore the number of spanning trees in

is the same as the number of non-singular submatrices of

with dimension

.

References

[1] Kirchhoff, G. Über die Auflösung der Gleichungen, auf welche man bei der untersuchung der linearen verteilung galvanischer Ströme geführt wird. Ann. Phys. Chem. 72, 497-508, 1847.

[2] Deo, N., Graph theory wity applications to Engineering and Computer Science, Prentice Hall, Englewood Clis, New Jersey, 1974.

[3] Lankaster, P., Tismenetsky, M., Theory of matrices : with applications, 2nd edition, Academic Press, 1985.

[4] Butler, S. K., Eigenvalues and Structures of Graphs, PhD Dissertation, University of California at San Diego, 1-9, 2008.

[5] Brualdi, R. A., The Mutually Beneficial Relationship of Graphs and Matrices, American Mathematical Society, 21-22, 2011.

[6] Boomen, J., The Matrix Tree Theorem, Masters thesis, Radboud Universiteit Nijmegen, Netherlands, 2007.

[7] Horn, Roger A., and Charles R. Johnson. “Matrix Analysis. 1985.” Cambridge, Cambridge.

[8] Srivastava, Nikhil. Matrix Tree Theorems (Lecture Notes). http://www.cs.yale.edu/homes/spielman/561/2009/lect18-09.pdf

[9] Oveis Gharan, Shayan. Random Spanning Trees (Lecture Notes). https://homes.cs.washington.edu/~shayan/courses/cse599/adv-approx-1.pdf

Reblogged this on Deli Zhang.

Hi Saad, you must be interesting in my article — The Formula for the Equivalent Resistance of Complex Resistance Network

It’s a typical application of Metrix-Tree theorem, I just can say wanderful. Please see https://zhangdeli.wordpress.com/

Helo, I’m Phương Hoa. I’m come from Viet Nam. And I can’t find and download: [7] Horn, Roger A., and Charles R. Johnson. “Matrix Analysis. 1985.” Cambridge, Cambridge. So can you proof F4 and F5? It’s very important for me. Please!

I am fairly certain that there is a typo here, “Let us consider B_T, which is a n\times m matrix. Since T is connected, according to Lemma L1 we know that rank(B_T)=n-1, which means the row-sum of B_T is not zero, and hence any set of n-1 rows of B_T are linearly independent. ” B_T is connected without an edge leading out of it (every column sums to zero), therefore it does row reduce to zero, but does still have n-1 linearly independent vectors, just not n linearly independent vectors. Let my know if I have misinterpreted what B_T is.

Also is B_T n x n-1 or n x m, or n x m where m = n – 1. seemed a little unclear.

Indeed, has dimension

has dimension  . I have fixed that. Moreover, you are right about the row-sum of

. I have fixed that. Moreover, you are right about the row-sum of  , it is zero. What I wrote was incorrect. I updated the lines as follows:

, it is zero. What I wrote was incorrect. I updated the lines as follows: , which means any collection of

, which means any collection of  rows of

rows of  are linearly independent.”

are linearly independent.”

“according to Lemma L1 we know that

Sorry for the late reply.

But this is a really cool proof thank you!

Thanks. But it is not “my” proof: I collected the pieces together from other proofs. Thanks for your comments.