Hi, I’m Saad, a software engineer at Google. Welcome to my blog!

Here’s my curriculum vitae (updated February 2022).

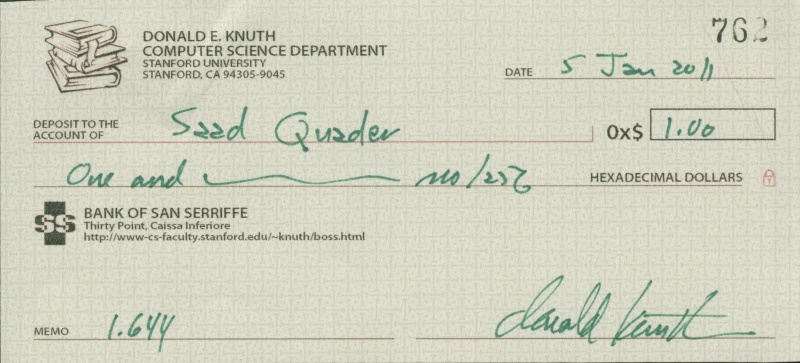

This is one of my prized possessions, a 0x$1 check from Donald Knuth’s Bank of San Serriffe!

On a Random Walk

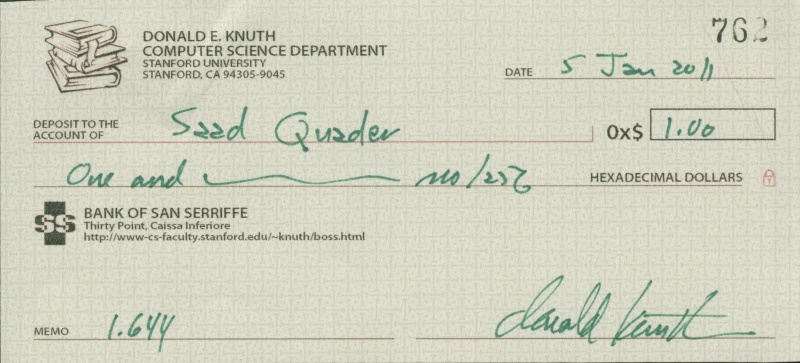

Consider eventual-consensus Proof-of-Stake (PoS) blockchains under the longest-chain rule, such as Ouroboros, Ouroboros Praos, Sleepy Consensus, and Snow White. All existing analyses rely on a sub-optimal honest majority assumption: if multiple honest nodes win the block-creation lottery in the same round, the analysis treats it as a bad event or, at best, a neutral event.

In our paper, we put forth a consistency analysis that takes advantage of these events and thus achieves an asymptotically optimal consistency error under the optimal honest majority assumption; this is a first in the literature. The analysis applies both to synchronous communication and to communication with a bounded delay.

This improvement is important since a sub-optimal honest majority assumption leads to weak security parameters for the blockchain system. The paper is going to appear at the prestigious conference IEEE ICDCS 2020. We posted the full version of our paper at https://eprint.iacr.org/2020/041.
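To get a feel for how often several honest nodes win the lottery in the same round, here is a minimal simulation sketch. It is a toy model and not the analysis from the paper: the function name `classify_rounds`, the independent per-node lottery, and all the probabilities are assumptions made purely for illustration.

```python
import random
from collections import Counter


def classify_rounds(num_rounds, honest_nodes, p_honest, p_adversary, seed=0):
    """Tally round types under a toy, independent per-round lottery.

    Each honest node wins a round with probability p_honest, and the
    adversary wins with probability p_adversary. These parameters are
    hypothetical; real protocols derive them from stake.
    """
    rng = random.Random(seed)
    tally = Counter()
    for _ in range(num_rounds):
        honest_winners = sum(rng.random() < p_honest for _ in range(honest_nodes))
        adversary_wins = rng.random() < p_adversary
        if adversary_wins:
            tally["adversarial"] += 1
        elif honest_winners == 0:
            tally["empty"] += 1
        elif honest_winners == 1:
            tally["uniquely honest"] += 1
        else:
            # Several honest nodes won at once: the event that older
            # analyses treat as bad or neutral.
            tally["multiply honest"] += 1
    return tally


if __name__ == "__main__":
    result = classify_rounds(num_rounds=10_000, honest_nodes=20,
                             p_honest=0.01, p_adversary=0.05)
    for kind, count in sorted(result.items()):
        print(f"{kind:16s} {count}")
```

Even in this crude model, multiply-honest rounds show up regularly once there are many honest nodes, which is why discarding them costs something.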

In one of my papers, I needed an upper bound on the fractional moments of the geometric distribution. The integer moments have a nice-looking upper bound involving factorials. In addition, Mathematica showed that the same upper bound holds for fractional moments once we use the Gamma function instead of factorials. The problem was, I could not prove it. Worse, I could not find a proof after much searching on the Internet.

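This post contains a proof that, for any real $\lambda$ in the range considered there, the $\lambda$th moment of a geometric random variable with success probability $p$ is at most the factorial-style bound for the integer moments, with the Gamma function in place of the factorial.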
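A quick numerical experiment in the spirit of the Mathematica check can be sketched as follows. The function names are hypothetical, the random variable is assumed to live on {1, 2, ...}, and the expression Γ(λ+1)/p^λ is only one natural Gamma-style candidate that I am assuming for illustration; it is not quoted from the post.

```python
import math


def geometric_moment(lam, p, max_terms=100_000):
    """Approximate E[X**lam] for X geometric on {1, 2, ...}.

    Sums k**lam * P(X = k) term by term, stopping once the probability
    underflows to zero or max_terms is reached.
    """
    if not 0.0 < p <= 1.0:
        raise ValueError("p must lie in (0, 1]")
    q = 1.0 - p
    total, prob = 0.0, p          # prob holds P(X = k) = p * q**(k - 1)
    for k in range(1, max_terms + 1):
        total += (k ** lam) * prob
        prob *= q
        if prob == 0.0:
            break
    return total


def gamma_style_candidate(lam, p):
    """Assumed candidate bound Gamma(lam + 1) / p**lam (illustrative only)."""
    return math.gamma(lam + 1.0) / p ** lam


if __name__ == "__main__":
    for lam in (1.0, 1.5, 2.0, 2.5):
        moment = geometric_moment(lam, 0.5)
        candidate = gamma_style_candidate(lam, 0.5)
        print(f"lambda={lam}: moment={moment:.4f}, candidate={candidate:.4f}")
```

A numerical check like this proves nothing, of course; it only suggests where a proof might exist.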

Continue reading “Fractional Moments of the Geometric Distribution”

I’m pleased that our paper, titled “Linear consistency for proof-of-stake blockchains,” has been accepted at SODA 2020.

What is the Confirmation Time for Blockchains?

When you are selling a pizza and accepting Bitcoin, usually you wait “for a while” before you “confirm the transaction” and deliver the pizza. You are cautious because a fraudulent user may double spend—use the same bill to pay two different vendors.
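To make "for a while" concrete, here is a minimal sketch of the classic proof-of-work calculation from the Bitcoin white paper: the probability that an attacker with a fraction q of the hash power ever catches up from z blocks behind. It illustrates the question only. It is not the proof-of-stake analysis from our paper, and the function name is my own.

```python
import math


def attacker_success_probability(q, z):
    """Chance that an attacker with hash-power share q overtakes the
    honest chain from z blocks behind (Bitcoin white paper calculation)."""
    p = 1.0 - q
    if q >= p:
        return 1.0                      # a majority attacker always catches up
    lam = z * (q / p)                   # expected attacker progress (Poisson mean)
    prob = 1.0
    for k in range(z + 1):
        poisson = math.exp(-lam) * lam ** k / math.factorial(k)
        prob -= poisson * (1.0 - (q / p) ** (z - k))
    return prob


if __name__ == "__main__":
    for z in range(0, 7):
        print(z, f"{attacker_success_probability(0.1, z):.7f}")
```

The probability drops quickly as z grows, which is why a vendor waits for several confirmations before handing over the pizza.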

Continue reading “Our SODA Paper on Proof-of-stake Blockchains”

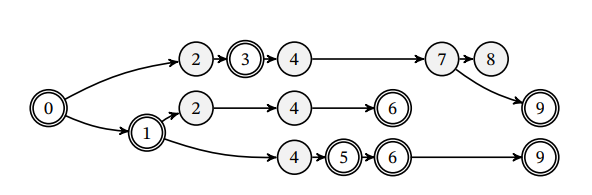

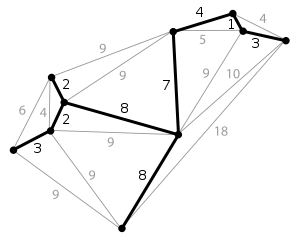

Our paper, titled How to Realize a Graph on Random Points, has been accepted at CCCG (Canadian Conference on Computational Geometry) 2019. I’ll write more about it in future posts.

Abstract:

Continue reading “Our Paper on Realizing a Graph on Random Points”

I finished listening to the book “Endure” by Alex Hutchinson. It is one of the most important books that I have read. I have had multiple important realizations from it.

The brain sets an expectation. Giving up is almost always a choice.

The brain sets an expectation. Whether you know it or not, feel it or not, your brain sets an expectation at a physiological as well as a psychological level. Try as you may, the brain controls your actions (again, at both the physiological and psychological levels) so that you meet the expectation but don’t exceed it.

Therefore, these expectations matter. Sometimes these expectations are conscious, in that we can spell them out. Other times, they are at a subconscious or even unconscious level. Are you aware of what you expect of yourself? Expect does not mean hope: what do you really, really expect of yourself?

Continue reading “My Takeaways from “Endure,” by Alex Hutchinson”

I gave a talk in our seminar about the proof-of-work vs. the proof-of-stake blockchain paradigm. Although I don’t have an audio/video recording, here is a Google Slides rendering of my original PowerPoint slides. Some of the animations are out of place or out of order, but in general, it feels okay.

I intended this talk to be accessible in nature, so I intentionally skipped many details and strived not to flaunt any equation in it.

Advertised Summary: Bitcoin is a blockchain protocol where finalized transactions need a “proof of work”. Such protocols have been criticized for their high demand for computing power, i.e., electricity. There is another family of protocols that relies on a “proof of stake”. In these protocols, the ability to make a transaction depends on your “stake” in the system instead of your computing power. In both cases, it is notoriously difficult to mathematically prove that these protocols are secure. Only a handful of provably secure protocols exist today. In this talk, I will tell a lighthearted story about the basics of the proof-of-work vs. proof-of-stake protocols. No equations but a lot of movie references.

Please enjoy, and please let me know your questions and comments.

In this note, we are going to state the PCP theorem and its relation to the hardness of approximating some NP-hard problems.

Intuitively, a PCP (Probabilistically Checkable Proof) system is an interactive proof system where the verifier is given $r(n)$ random bits and is allowed to look into the proof $\pi$ in $q(n)$ many locations. If the string $x$ is indeed in the language, then there exists a proof so that the verifier always accepts. However, if $x$ is not in the language, no prover can convince this verifier with probability more than $1/2$. The proof has to be short, i.e., of size at most $q(n) 2^{r(n)}$. This class of languages is designated as PCP[r(n), q(n)].

Theorem A (PCP theorem). Every NP language has a highly efficient PCP verifier. In particular, $\mathrm{NP} = \mathrm{PCP}[O(\log n), O(1)]$.
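To illustrate what "a few random bits and a few queries into the proof" looks like in practice, here is a toy sketch built on the BLR linearity test, a standard ingredient of PCP constructions. It is not a PCP for an NP language. The "proof" is just the truth table of a Boolean function, the verifier reads three positions, and all names are hypothetical.

```python
import random


def blr_verifier(proof, n, rng):
    """One run of a 3-query verifier on a truth table of length 2**n.

    Spends 2n random bits to pick x and y, then reads only proof[x],
    proof[y] and proof[x ^ y]; accepts iff they agree with linearity
    over GF(2).
    """
    x = rng.getrandbits(n)
    y = rng.getrandbits(n)
    return (proof[x] ^ proof[y]) == proof[x ^ y]


def rejection_probability(proof, n):
    """Exact rejection probability, by enumerating every (x, y) pair."""
    size = 1 << n
    rejects = sum((proof[x] ^ proof[y]) != proof[x ^ y]
                  for x in range(size) for y in range(size))
    return rejects / size ** 2


def parity_table(mask, n):
    """Truth table of the linear function x -> parity of (mask AND x)."""
    return [bin(mask & x).count("1") % 2 for x in range(1 << n)]


if __name__ == "__main__":
    rng = random.Random(0)
    good_proof = parity_table(0b101, 3)   # a linear function: always accepted
    bad_proof = [0, 0, 0, 1]              # AND of two bits: not linear
    print(all(blr_verifier(good_proof, 3, rng) for _ in range(100)))
    print(rejection_probability(bad_proof, 2))
```

A correct proof is accepted with probability one, while a bad proof is caught with constant probability per run, so repeating the test a few times drives the error down.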

Continue reading “Notes on the PCP Theorem and the Hardness of Approximation: Part 1”

A blockchain protocol is essentially a distributed consensus protocol. A Proof-of-Work protocol such as Bitcoin requires a user to show a proof (for example, evidence of having made a large number of computations) before he can add a block to an existing chain. Proof-of-Stake protocols, on the other hand, do not require “burning electricity,” since the ability to “mine” a coin depends only on the user’s current stake in the system.
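The contrast can be sketched in a few lines. Below, `mine` is a hypothetical hash-puzzle loop in the style of proof of work, and `pick_stake_leader` is a hypothetical stake-weighted lottery. Both are simplifications I am assuming for illustration: real protocols such as Bitcoin or Ouroboros define their own block formats and use verifiable randomness rather than a local random number generator.

```python
import hashlib
import random


def mine(block_data: bytes, difficulty_bits: int) -> int:
    """Proof-of-work sketch: search for a nonce whose SHA-256 digest,
    read as an integer, falls below a target. Expected work doubles
    with every extra difficulty bit."""
    target = 1 << (256 - difficulty_bits)
    nonce = 0
    while True:
        digest = hashlib.sha256(block_data + nonce.to_bytes(8, "big")).digest()
        if int.from_bytes(digest, "big") < target:
            return nonce
        nonce += 1


def pick_stake_leader(stakes: dict, rng: random.Random) -> str:
    """Proof-of-stake sketch: choose the next block creator with
    probability proportional to stake, with no hash search at all."""
    names = list(stakes)
    weights = [stakes[name] for name in names]
    return rng.choices(names, weights=weights, k=1)[0]


if __name__ == "__main__":
    nonce = mine(b"toy block", difficulty_bits=16)
    print("proof-of-work nonce:", nonce)
    rng = random.Random(42)
    stakes = {"alice": 60, "bob": 30, "carol": 10}   # hypothetical stake table
    print("proof-of-stake leader:", pick_stake_leader(stakes, rng))
```

The first function burns CPU cycles in proportion to the difficulty; the second costs essentially nothing, which is the whole appeal, and also the reason security proofs for proof of stake are delicate.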

The growing computing power of Bitcoin miners already consumes a significant amount of electricity. One can easily see the need for a provably secure and efficient cryptocurrency without the heavy energy requirement. However, it is easier said than done. So far, I am aware of only four Proof-of-Stake protocols that give provable security guarantees: Ouroboros, led by Aggelos Kiayias, Alex Russell, and others; Snow White, led by Rafael Pass and Elaine Shi; Ouroboros Praos, from the Ouroboros team; and Algorand, led by Silvio Micali. There is also an open-source initiative to implement Ouroboros, named Cardano.

In this post, I am going to present the main theorems of Ouroboros.

Continue reading “Ouroboros Proof-of-Stake Blockchain Protocol: Assumptions and Main Theorems”